Computational thinking and ChatGPT

Spanish title: Pensamiento computacional y ChatGPT

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Copyright statement

The authors exclusively assign to the Universidad EIA, with the power to assign to third parties, all the exploitation rights that derive from the works that are accepted for publication in the Revista EIA, as well as in any product derived from it and, in particular, those of reproduction, distribution, public communication (including interactive making available) and transformation (including adaptation, modification and, where appropriate, translation), for all types of exploitation (by way of example and not limitation: in paper, electronic, online, computer or audiovisual format, as well as in any other format, even for promotional or advertising purposes and/or for the production of derivative products), for a worldwide territorial scope and for the entire duration of the rights provided for in the current published text of the Intellectual Property Law. This assignment will be made by the authors without the right to any type of remuneration or compensation.

Consequently, the authors may not publish or disseminate the works that are selected for publication in the Revista EIA, either totally or partially, nor authorize their publication by third parties, without the prior express authorization, requested and granted in writing, from the Universidad EIA.


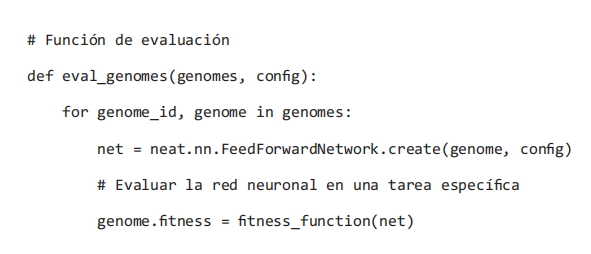

Natural language processing models ("natural language" being the usual term for human language) of the Large Language Model (LLM) type have recently appeared. Examples include Bidirectional Encoder Representations from Transformers (BERT), Language Model for Dialogue Applications (LaMDA), Large Language Model Meta Artificial Intelligence (LLaMA) and the so-called Generative Pre-trained Transformers (GPT). With their arrival, users and the general public have begun to form all kinds of speculations and expectations about how far these models can go. They are also exploring new ways of using them: some realistic, others creative, and others verging on fantasy. Computational Thinking is no exception to this trend. It is therefore of utmost importance to develop a clear view of this innovative technology and to avoid creating and spreading myths, which only cloud our perception and understanding of it. It is equally important to find the right balance regarding the reach such technological trends can have and the ways they can be used, with special emphasis on Computational Thinking. In this paper we present a brief analysis of what a Generative Pre-trained Transformer is. We also offer some reflections and ideas about the ways in which Large Language Models can influence Computational Thinking, and about their possible consequences. In particular, we analyze the well-known ChatGPT, evaluating the text it generates and its credibility for tasks commonly associated with Computational Thinking, such as writing an algorithm, coding it and solving logic problems.
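
The abstract mentions Generative Pre-trained Transformers without describing their core mechanism. As an illustration only (it is not taken from the article), the following pure-Python sketch computes causal scaled dot-product self-attention, the operation at the heart of the Transformer decoder that GPT-style models stack many times. Each token position can only attend to itself and to earlier positions, which is what allows such a model to be trained to predict the next token. The function names `softmax` and `causal_attention` and the toy vectors are hypothetical. Real GPT models add learned query/key/value projections, multiple attention heads and many stacked layers, all of which are omitted here.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(queries: List[Vector], keys: List[Vector],
                     values: List[Vector]) -> List[Vector]:
    """Scaled dot-product attention with a causal (look-back-only) mask.

    Position i attends only to positions 0..i, so the output for a token
    never depends on tokens that come after it.
    """
    d_k = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        # Scores against the current and earlier positions only (the mask).
        scores = [sum(qa * ka for qa, ka in zip(q, keys[j])) / math.sqrt(d_k)
                  for j in range(i + 1)]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the visible value vectors.
        out = [sum(w * values[j][c] for j, w in enumerate(weights))
               for c in range(len(values[0]))]
        outputs.append(out)
    return outputs


if __name__ == "__main__":
    # Hypothetical 3-token sequence with 2-dimensional embeddings; queries,
    # keys and values are the same vectors here (no learned projections).
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in causal_attention(x, x, x):
        print([round(v, 3) for v in row])
```

The first token can only see itself, so its output equals its own value vector. Changing the third token leaves the first two outputs untouched. That look-back-only property is what the mask is meant to guarantee.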

